Motivation

As 3D Gaussian Splatting (3DGS) gains popularity for novel view synthesis and 3D reconstruction, the uncertainties inherent in real-world data are becoming increasingly evident. These uncertainties often stem from challenges in the training images, such as limited viewpoint diversity, occlusions, reflections, transparency, and featureless areas, and they can impede the model's ability to generalize accurately. However, methods for estimating general reconstruction uncertainty in 3DGS scenes are currently lacking.

Method

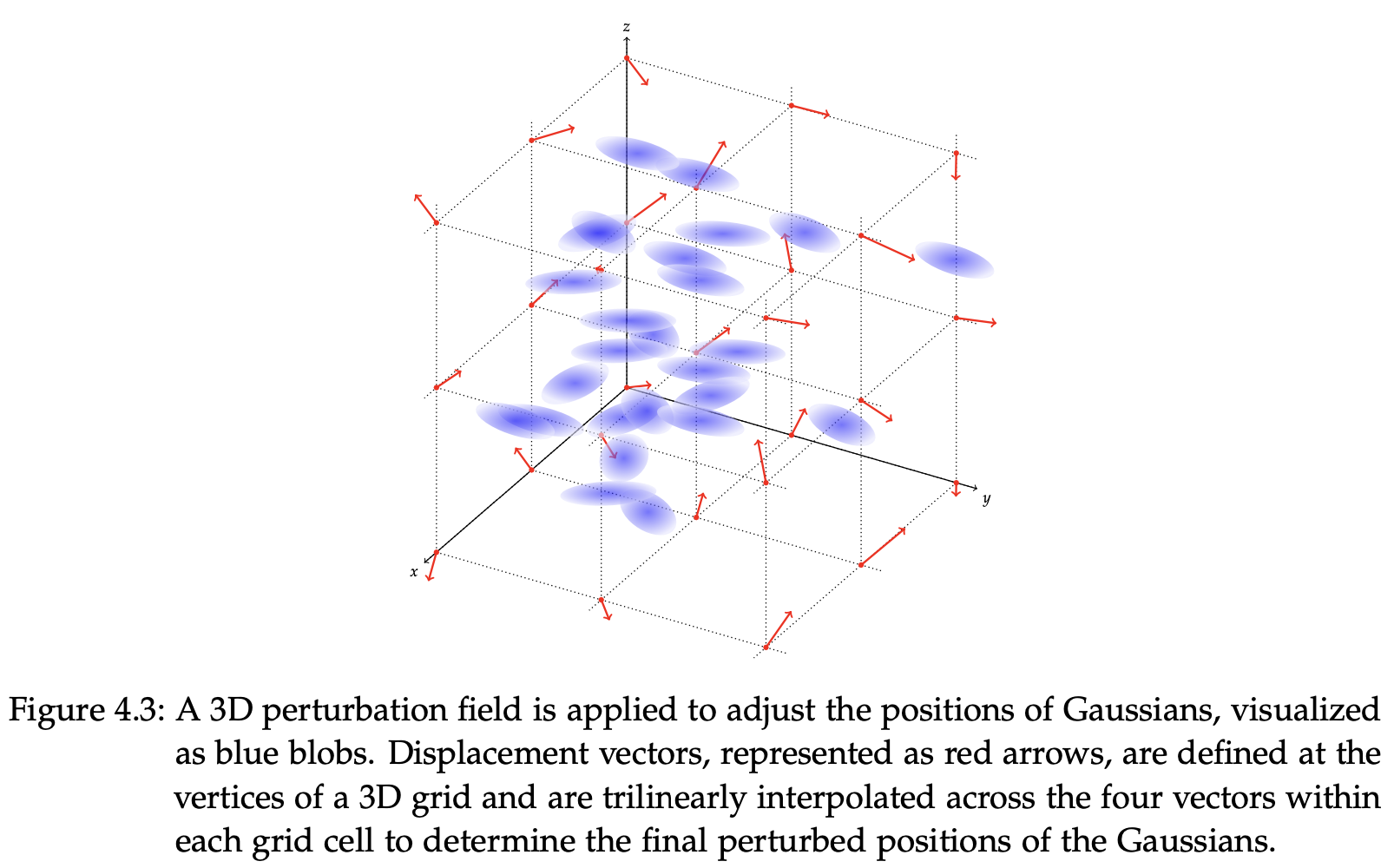

We present a method based on BayesRays that employs a spatial perturbation field and Bayesian approximation to estimate uncertainty in any pre-trained 3DGS scene without requiring modifications to the training pipeline. Our algorithm generates a spatial uncertainty field that can be visualized via an additional color channel. Furthermore, we explore alternative techniques for perturbation modeling and demonstrate that our method consistently delivers robust results across various datasets and scene configurations, outperforming previous approaches. Our approach equips researchers with a valuable tool for uncertainty-based decision-making, allowing algorithms to account for uncertainty and produce more reliable outcomes.
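To make the idea of a spatial perturbation field with a Bayesian approximation more concrete, the following is a minimal NumPy sketch, not the actual implementation. It assumes a diagonal Laplace approximation in the style of BayesRays: Gaussian centers are displaced by a trilinearly interpolated grid of offsets, the diagonal of the Hessian is approximated by summed squared Jacobian entries plus a regularizer, and its inverse is read as a per-vertex variance. The function name, the `pos_grads` input (gradients of rendered samples with respect to each Gaussian center, which a real pipeline would obtain from the rasterizer's backward pass), the grid resolution, and the regularization weight are all hypothetical.

```python
import numpy as np

def perturbation_field_uncertainty(centers, pos_grads, grid_res=8, reg=1e-4):
    """Toy diagonal-Laplace uncertainty over a grid perturbation field.

    centers:   (N, 3) Gaussian centers, assumed normalized to [0, 1]^3.
    pos_grads: (N, R, 3) hypothetical gradients of R rendered samples
               with respect to each Gaussian center.
    Returns an (N,) array with one uncertainty value per Gaussian.
    """
    n_samples = pos_grads.shape[1]
    # Continuous grid coordinates and the lower corner of each enclosing cell.
    scaled = np.clip(centers, 0.0, 1.0) * (grid_res - 1)
    base = np.minimum(np.floor(scaled).astype(int), grid_res - 2)
    frac = scaled - base

    # Jacobian of every rendered sample w.r.t. every grid vertex offset.
    jac = np.zeros((grid_res, grid_res, grid_res, n_samples, 3))
    corners = []
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                offset = np.array([dx, dy, dz])
                # Trilinear weight of this corner for each Gaussian.
                w = np.prod(np.where(offset == 1, frac, 1.0 - frac), axis=1)
                idx = base + offset
                corners.append((idx, w))
                # Chain rule: d(sample)/d(vertex) = weight * d(sample)/d(center).
                np.add.at(jac, (idx[:, 0], idx[:, 1], idx[:, 2]),
                          w[:, None, None] * pos_grads)

    # Diagonal Hessian approximation; the regularizer bounds unobserved vertices.
    hessian_diag = (jac ** 2).sum(axis=3) + 2.0 * reg
    variance = 1.0 / hessian_diag  # (G, G, G, 3)

    # Interpolate the variance field back to the Gaussian centers.
    per_gaussian = np.zeros((centers.shape[0], 3))
    for idx, w in corners:
        per_gaussian += w[:, None] * variance[idx[:, 0], idx[:, 1], idx[:, 2]]
    # Assumption: summarize the three axes as the square root of the trace.
    return np.sqrt(per_gaussian.sum(axis=1))

# Example with random stand-in data (no real renderer involved).
rng = np.random.default_rng(0)
demo_centers = rng.random((100, 3))
demo_grads = rng.normal(size=(100, 16, 3))
demo_uncertainty = perturbation_field_uncertainty(demo_centers, demo_grads)
```

In this sketch, regions whose perturbation barely affects any rendered sample receive a small Hessian entry and therefore a large variance, which matches the intuition that poorly observed geometry is uncertain. The resulting per-Gaussian value could then be stored as an extra channel and rendered alongside color.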

Results

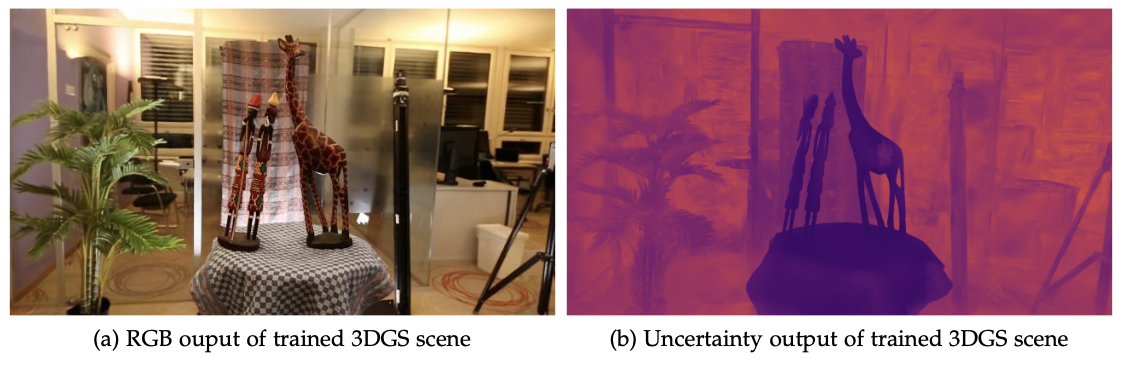

Qualitative results showing the rendered image from 3D Gaussian Splatting and the corresponding uncertainty. Darker regions indicate lower reconstruction uncertainty, while brighter regions indicate higher uncertainty.